At C.H. Robinson, we love open source, and we’re continuing to give back by releasing a foundational component of our event-driven messaging platform, Chr.Avro! We use this library throughout the organization to transmit data between applications.

Why Avro?

When applications exchange data, they need to agree on a serialization format—the sending application has to write the data so that it can be read by receiving applications. For example, here’s a message serialized using the JSON format:

Text-based serialization formats like JSON are popular because they’re fairly human-readable. Most English-speaking people would be able to understand that message even though it’s written in a format designed for machines.

However, text-based formats aren’t always ideal. One major downside is that they include a lot of structural information. This is what the JSON data looks like represented in bytes:

The critical information (shaded blue) makes up less than half of the total data, about 41%. What if there were a way to eliminate the rest of it?

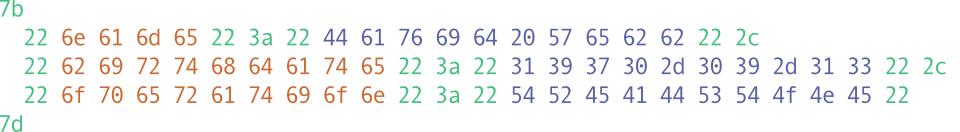

Avro’s answer to that question is the schema, a document that describes the shape of a message. An Avro schema for the message above might look something like this:

    {
      "name": "cia.sad.Operative",
      "type": "record",
      "fields": [
        {
          "name": "name",
          "type": "string"
        },
        {
          "name": "birthdate",
          "type": {
            "type": "int",
            "logicalType": "date"
          }
        },
        {
          "name": "operation",
          "type": {
            "name": "cia.sad.Operation",
            "type": "enum",
            "symbols": ["SILVERLAKE", "TREADSTONE", "BLACKBRIAR"]
          }
        }
      ]
    }

If the sending and receiving applications all know the schema in advance, the same data can be transmitted in a significantly smaller form:

This Avro message is about 81% smaller than the JSON message. At Robinson’s scale, that level of reduction in size can save gigabytes of storage and network traffic every day. More importantly, Avro helps us ensure that every message flowing through our systems is encoded correctly.
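
To make the size difference concrete, here is a minimal Python sketch that hand-encodes a record following the Avro binary encoding rules for the schema above: strings are a length prefix plus UTF-8 bytes, ints are zigzag varints, dates are days since the Unix epoch, and enums are the index of the symbol. The record values are hypothetical (they are not the message from this post), and the helper names are made up for illustration; a real application would use an Avro library rather than encoding by hand.

```python
import json
from datetime import date

def zigzag_varint(n: int) -> bytes:
    """Encode a signed integer the way Avro encodes int/long values."""
    z = (n << 1) ^ (n >> 63)  # zigzag: small negatives become small positives
    out = bytearray()
    while True:
        byte = z & 0x7F
        z >>= 7
        if z:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)

def encode_string(s: str) -> bytes:
    """Avro string: byte length as a long, then the UTF-8 bytes."""
    data = s.encode("utf-8")
    return zigzag_varint(len(data)) + data

# Symbol order comes from the enum in the schema above.
OPERATIONS = ["SILVERLAKE", "TREADSTONE", "BLACKBRIAR"]
EPOCH = date(1970, 1, 1)

def encode_operative(name: str, birthdate: date, operation: str) -> bytes:
    """Avro record: field values concatenated in schema order, no field names."""
    return (
        encode_string(name)
        + zigzag_varint((birthdate - EPOCH).days)      # "date" logical type
        + zigzag_varint(OPERATIONS.index(operation))   # enum as symbol index
    )

# Hypothetical example values, chosen only for illustration.
record = {"name": "Jane Doe", "birthdate": date(1971, 1, 1), "operation": "TREADSTONE"}

avro_bytes = encode_operative(**record)
json_bytes = json.dumps(
    {**record, "birthdate": record["birthdate"].isoformat()}
).encode("utf-8")

print(f"JSON: {len(json_bytes)} bytes, Avro: {len(avro_bytes)} bytes")
```

Field names, quotes, and braces never appear in the Avro output because the schema already tells the reader what to expect and in which order. The exact savings depend on the data, so the ratio for this sample will differ from the figures quoted above.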

Avro isn’t the only binary serialization format out there. Other formats, like Protocol Buffers and Thrift, also rely on schemas to enforce message shape and reduce size. We chose Avro for a few reasons:

- Tools in the Kafka ecosystem tend to have first-class support for Avro, in no small part because it’s closely tied to Hadoop and endorsed by providers like Confluent.

- As Confluent notes in their blog post on the subject, Avro makes it easier to evolve schemas over time. Guaranteeing that new versions of a schema will continue to be compatible with old versions keeps us responsive to changing business needs without sacrificing safety.

- Avro doesn’t demand code generation. Code generation tools are available for most popular programming languages, but using them isn’t a hard requirement.
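
As a rough illustration of the schema evolution point, the Python sketch below checks one narrow compatibility rule from Avro's schema resolution: a reader can handle records from a writer if every field the reader expects is either present in the writer's schema or has a default. The `can_read` helper and the `codename` field are hypothetical, and real resolution also covers type promotion, aliases, enum symbols, and unions, so treat this as a simplification rather than a full compatibility check.

```python
def can_read(reader_schema: dict, writer_schema: dict) -> bool:
    """Simplified check covering only added or removed record fields."""
    writer_fields = {f["name"] for f in writer_schema["fields"]}
    return all(
        f["name"] in writer_fields or "default" in f
        for f in reader_schema["fields"]
    )

# Version 1: a trimmed-down form of the record schema shown earlier.
V1 = {
    "name": "cia.sad.Operative",
    "type": "record",
    "fields": [
        {"name": "name", "type": "string"},
    ],
}

# Version 2 adds a hypothetical field with a default value.
V2 = {
    **V1,
    "fields": V1["fields"] + [{"name": "codename", "type": "string", "default": ""}],
}

# A variant that adds the same field without a default.
V2_NO_DEFAULT = {
    **V1,
    "fields": V1["fields"] + [{"name": "codename", "type": "string"}],
}

print(can_read(V2, V1))             # new reader, old data: default fills the gap
print(can_read(V1, V2))             # old reader, new data: extra field is skipped
print(can_read(V2_NO_DEFAULT, V1))  # no default, so old data cannot be read
```

In this sketch, adding a field with a default keeps both old and new readers working, while adding one without a default breaks reading of existing data.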

Building Chr.Avro

As we started to experiment with Avro, we were unable to find any practical ways to map Avro schemas to our .NET data models. The Apache Avro library for .NET only supported mapping Avro records to generated classes or an untyped generic class, and an Avro library released by Microsoft in 2014 had since been deprecated.

We chose to develop our own Avro library, and now we’re ready to open it up to the community at large. In addition to its core serialization and mapping functionality, Chr.Avro includes some features that make it easier to work with Avro:

- An Avro schema builder: Chr.Avro inspects .NET types and generates compatible Avro schemas, a time-saver for teams that already have complex .NET models.

- Rudimentary code generation: Given record and enum schemas, Chr.Avro can generate matching C# types.

- Confluent Kafka client integration: Chr.Avro integrates with Confluent’s client libraries, reducing the amount of effort required to build Avro-enabled consumers and producers.
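
The schema builder idea can be sketched in a few lines. The example below is not Chr.Avro's API and is not .NET; it is a hypothetical Python analogue that inspects dataclasses and enums and emits an Avro schema as a dictionary, just to show the general shape of type-driven schema generation. The `build_schema` function, the type mapping, and the model classes are all assumptions made for illustration.

```python
import dataclasses
import enum
from datetime import date

# Assumed mapping from Python primitives to Avro primitive types.
PRIMITIVES = {str: "string", int: "long", float: "double", bool: "boolean", bytes: "bytes"}

def build_schema(tp, namespace: str = "example"):
    """Walk a type and return a matching Avro schema (hypothetical helper)."""
    if tp in PRIMITIVES:
        return PRIMITIVES[tp]
    if tp is date:
        return {"type": "int", "logicalType": "date"}
    if isinstance(tp, type) and issubclass(tp, enum.Enum):
        return {
            "name": f"{namespace}.{tp.__name__}",
            "type": "enum",
            "symbols": [member.name for member in tp],
        }
    if dataclasses.is_dataclass(tp):
        # Assumes annotations are real types, not strings.
        return {
            "name": f"{namespace}.{tp.__name__}",
            "type": "record",
            "fields": [
                {"name": f.name, "type": build_schema(f.type, namespace)}
                for f in dataclasses.fields(tp)
            ],
        }
    raise TypeError(f"no Avro mapping for {tp!r}")

class Operation(enum.Enum):
    SILVERLAKE = 1
    TREADSTONE = 2
    BLACKBRIAR = 3

@dataclasses.dataclass
class Operative:
    name: str
    birthdate: date
    operation: Operation

schema = build_schema(Operative, namespace="cia.sad")
print(schema["name"], [f["name"] for f in schema["fields"]])
```

For these model classes the result has the same structure as the record schema shown earlier in this post. A production-grade builder has many more decisions to make (nullability, numeric widths, collections, naming), which is where a dedicated library earns its keep.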

Next Steps

C.H. Robinson has already benefited from this project, but we’re not done yet. We have more features in the pipeline that will continue to make it easier to use Avro in .NET applications. Check out the project on GitHub or learn more on its website.

We’re hopeful that developing Chr.Avro as an open source project will enable us to refine it at a higher velocity, and we’re extremely excited to share it with the community!